Introduction

Activation functions are a fundamental component in the architecture of neural networks. They play a crucial role in determining the output of a neural network node (or “neuron”) and contribute to the ability of the network to model complex non-linear patterns. This article aims to explain the concept, types, and importance of activation functions in neural networks.

What are Activation Functions?

In the simplest terms, an activation function is a mathematical function applied to a neuron’s pre-activation (the weighted sum of its inputs plus a bias) to compute the output signal that is passed on to the next layer of neurons. Functions such as ReLU output zero for part of their input range, which is where the idea of a neuron being “activated” or not comes from. The purpose of an activation function is to introduce non-linearity into the network, which allows it to learn and perform more complex tasks.
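As a rough illustration of this idea, the following Python sketch models a single neuron as a weighted sum of its inputs plus a bias, followed by an activation function. The helper names (`neuron`, `identity`, `relu`, `two_linear_layers`, `one_linear_layer`) and all numeric weights are hypothetical, chosen only so the arithmetic is exact and easy to follow. The second half checks that two stacked neurons using the identity (linear) activation compute exactly the same function as a single linear neuron, which is why a non-linear activation is needed.

```python
from typing import Callable, Sequence

def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float,
           activation: Callable[[float], float]) -> float:
    """One neuron: weighted sum of inputs plus bias, then the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias  # pre-activation
    return activation(z)

def identity(z: float) -> float:
    return z  # "linear" activation: passes z through unchanged

def relu(z: float) -> float:
    return max(0.0, z)  # non-linear: clips negative values to 0

# Hypothetical values, chosen so the floating-point arithmetic is exact.
x = [1.0, -2.0, 0.5]
w = [0.5, 0.25, -1.0]
b = 0.25
print(neuron(x, w, b, identity))  # -0.25
print(neuron(x, w, b, relu))      # 0.0

# Two stacked scalar neurons with the identity activation...
def two_linear_layers(v: float) -> float:
    h = neuron([v], [2.0], 1.0, identity)      # h = 2v + 1
    return neuron([h], [-3.0], 0.5, identity)  # -3h + 0.5

# ...collapse into one linear map: -3(2v + 1) + 0.5 = -6v - 2.5
def one_linear_layer(v: float) -> float:
    return -6.0 * v - 2.5

for v in [-1.0, 0.0, 2.0]:
    assert two_linear_layers(v) == one_linear_layer(v)
```

Swapping `identity` for `relu` in the first layer of `two_linear_layers` breaks this equivalence: for `v = -1.0` the hidden value is clipped to 0, so the output becomes 0.5 instead of 3.5.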

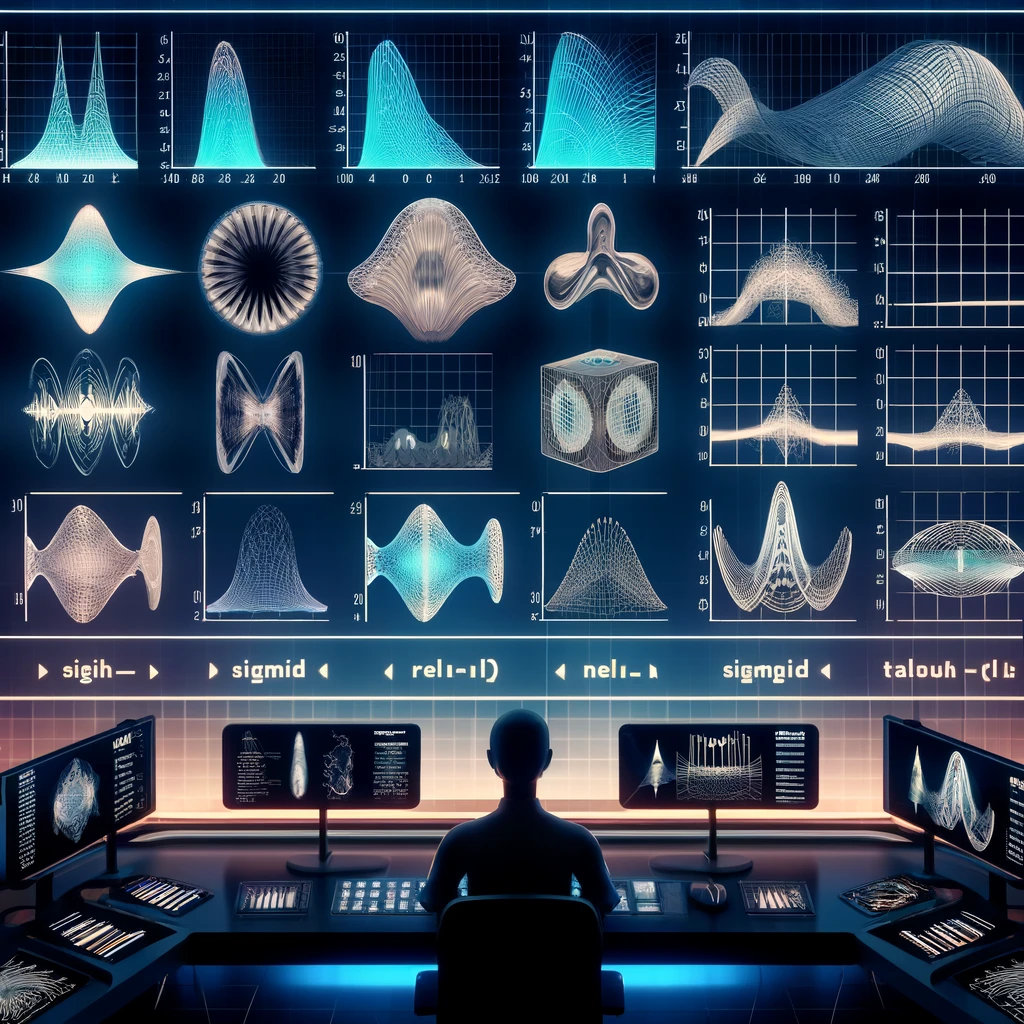

Types of Activation Functions

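- Linear Activation Function: This is a straight-line function, $f(x) = cx$, whose output is proportional to its input. It is rarely used in hidden layers because it does not allow the model to learn complex patterns in the data: a stack of layers with linear activations is still just a linear function of the input, no matter how many layers it has. It does appear in the output layer of regression models, where the prediction must be an unbounded real value.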

- Sigmoid Activation Function: It is an S-shaped function defined as $S(x) = \frac{1}{1 + e^{-x}}$. Since its output lies between 0 and 1, it is primarily used in models that must output a probability, such as the output layer of a binary classifier.

- ReLU (Rectified Linear Unit) Function: This is one of the most commonly used activation functions in neural networks, especially in convolutional neural networks (CNNs). It is defined as $R(x) = \max(0, x)$: positive values pass through unchanged, and negative values are set to zero.

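- Tanh (Hyperbolic Tangent) Function: Defined as $\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$, it is S-shaped like the sigmoid, but its output ranges between -1 and 1 rather than between 0 and 1. Because its output is centered on zero, it is generally preferred over the sigmoid in hidden layers: zero-centered activations tend to make gradient-based training with backpropagation converge faster.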

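- Leaky ReLU: It is a variant of ReLU designed to solve the problem of “dying neurons” in ReLU-based networks, where a neuron whose input is always negative outputs zero, receives zero gradient, and stops learning. Leaky ReLU instead allows a small, non-zero slope when the input is less than zero: $f(x) = x$ for $x > 0$ and $f(x) = \alpha x$ otherwise, where $\alpha$ is a small constant such as 0.01.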

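- Softmax Function: It is often used in the output layer of a multi-class classifier, where the result must represent a probability distribution over the possible outcomes. For a vector of scores $z$, it computes $\mathrm{softmax}(z)_i = \frac{e^{z_i}}{\sum_j e^{z_j}}$, so every output is positive and the outputs sum to 1.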
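For reference, here is a minimal pure-Python sketch of the six functions listed above, operating on plain floats (and on a list of floats for softmax) rather than on tensors. The function names and the default slopes `c = 1.0` and `alpha = 0.01` are illustrative assumptions, not the API of any particular library.

```python
import math
from typing import List

def linear(x: float, c: float = 1.0) -> float:
    return c * x  # output proportional to the input

def sigmoid(x: float) -> float:
    # S(x) = 1 / (1 + e^{-x}), split by sign so math.exp never overflows
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def relu(x: float) -> float:
    return max(0.0, x)  # negative inputs become 0

def tanh(x: float) -> float:
    return math.tanh(x)  # zero-centered output in (-1, 1)

def leaky_relu(x: float, alpha: float = 0.01) -> float:
    return x if x > 0 else alpha * x  # small slope for negative inputs

def softmax(logits: List[float]) -> List[float]:
    # Subtracting the max score avoids overflow and leaves the result unchanged
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

print(sigmoid(0.0))              # 0.5
print(relu(-3.0), relu(2.0))     # 0.0 2.0
print(tanh(0.0))                 # 0.0
print(softmax([1.0, 2.0, 3.0]))  # three positive values that sum to 1
```

The sign split in `sigmoid` and the max subtraction in `softmax` are standard numerical-stability tricks; mathematically both return the same values as the textbook formulas.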

Importance of Activation Functions

Activation functions shape how each neuron responds to its inputs, which in turn determines which patterns in the input data the network is able to represent and learn. During forward propagation, they compute each neuron’s output (and, for functions like ReLU, whether it outputs anything at all). During backpropagation, their derivatives scale the gradients passed back to earlier layers, so they directly affect how large the weight updates are and how efficiently the network trains.
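To make the backpropagation point concrete, the sketch that follows computes the derivatives of several of the activation functions listed earlier. During backpropagation, the gradient reaching a layer is multiplied by the activation’s derivative at that layer’s pre-activation, so these values act as scaling factors. The 10-layer chain is a hypothetical, deliberately simplified example that ignores the weights and looks only at the activation derivatives; the function names are illustrative.

```python
import math

def sigmoid(x: float) -> float:
    # Numerically stable form of 1 / (1 + e^{-x})
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def sigmoid_grad(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)  # S'(x) = S(x)(1 - S(x)), maximum 0.25 at x = 0

def tanh_grad(x: float) -> float:
    return 1.0 - math.tanh(x) ** 2  # maximum 1.0 at x = 0

def relu_grad(x: float) -> float:
    # ReLU is not differentiable at 0; using 0 there is a common convention
    return 1.0 if x > 0 else 0.0

def leaky_relu_grad(x: float, alpha: float = 0.01) -> float:
    return 1.0 if x > 0 else alpha  # never exactly 0 when alpha > 0

# Hypothetical 10-layer chain in which every pre-activation is 0 (the sigmoid's
# best case); weights are ignored to isolate the effect of the activation.
depth = 10
sigmoid_chain = sigmoid_grad(0.0) ** depth
tanh_chain = tanh_grad(0.0) ** depth
print(sigmoid_chain)                           # 9.5367431640625e-07 (= 0.25**10)
print(tanh_chain)                              # 1.0
print(relu_grad(-1.0), leaky_relu_grad(-1.0))  # 0.0 0.01
```

Even in the sigmoid’s best case, the product of ten derivatives is $0.25^{10} \approx 9.5 \times 10^{-7}$, which illustrates why deep stacks of sigmoid layers can suffer from vanishing gradients. ReLU passes a derivative of 1 for positive inputs, while Leaky ReLU keeps a small non-zero derivative for negative inputs where ReLU’s is exactly 0.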

Conclusion

The choice of an activation function can significantly influence the performance of a neural network. It affects how fast the network can learn and the accuracy of the outputs it produces. While ReLU is currently the most popular due to its computational simplicity and efficiency, the choice of activation function ultimately depends on the specific requirements and characteristics of each task. As research progresses, new activation functions that can lead to more efficient training and higher performance are continually being explored and developed.